Nov. 24, 2020

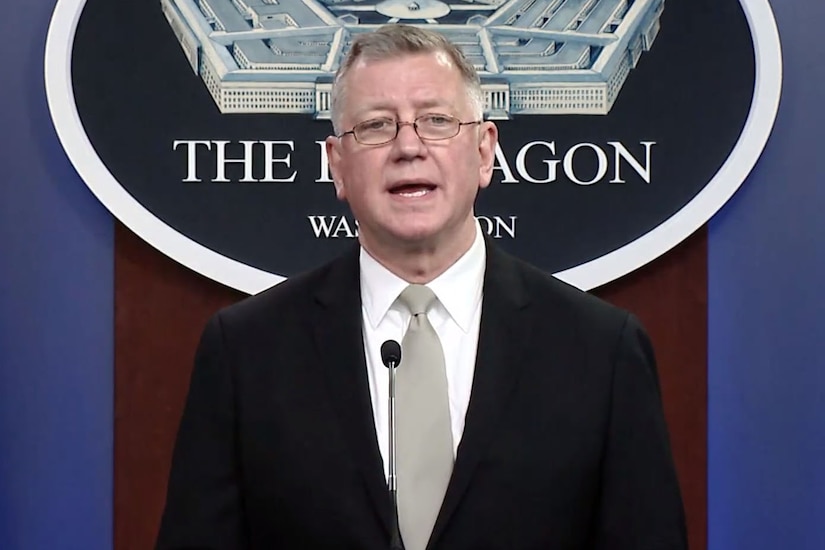

U.S. Marine Corps Lieutenant General Michael S. Groen, Director, Joint Artificial Intelligence Center

LIEUTENANT GENERAL MICHAEL S. GROEN: Okay, good

afternoon, welcome. I'm Mike Groen, Lieutenant Colon— Lieutenant

General, United States Marine Corps. I'm the new Director of the Joint

Artificial Intelligence Center, the JAIC. I'm very glad for the

opportunity to interact with you, look forward to our conversation

today.

It's my great privilege to serve alongside the members of the JAIC

but also the much larger numbers across the department that -- that are

committed to changing the way we decide, the way we fight, the way we

manage, and the way we prepare.

It's clear to me that we do not have an awareness problem in the

department, but like with any transformational set of technologies, we

have a lot of work to do in broadly understanding the transformative

nature and the implications of AI integration.

We're challenged not so much in finding the technologies we need but

rather to get – to getting about the hard work of AI implementation.

I've often used the analogy of the transformation into Industrial Age

warfare, of literally lancers riding into battle against guns that were

machines, flying machines that scouted positions or dropped bombs, of

massed long range artillery machines or even poison gas to use as a

weapon, used as a weapon, at an industrial scale.

That transformation that had been underway for decades suddenly

coalesced into something very lethal and very real. Understanding that

came at great cost. Another example is blitzkrieg, literally lightning

war, that leveraged technology known to both sides to create – but – but

was used by one side to create tempo that overwhelmed the slower, more

methodical force.

In either case, the artifacts of the new technological environment

were plain to see in the society that surrounded the participants.

These transformational moments were eminently foreseeable but in many

cases not foreseen.

I would submit that today we face a very similar situation. We're

surrounded by the artifacts of the Information Age. We need to

understand the impacts of this set of globally available technologies on

the future of warfare. We need to work hard now to foresee what is

foreseeable.

We have a tech-native military and civilian workforce that enjoys a

fast-flowing, responsive, and tailored information environment, at home

when they're on their mobile phones. They want that same experience in

the military and department systems that they operate. Our

warfighters want responsive, data-driven decisions. Our commanders want

to operate at speed and with a mix of manned and unmanned

capabilities. The citizens seek efficiency and effectiveness from their

investments in defense. Artificial intelligence can unlock all of

these.

We're surrounded by examples in every major industry of data-driven

enterprise, that operate with speed and efficiency, that leaves their

competitors in the dust. We want that. Most important of all, we need

to ensure that the young men and women who go in harm's way on our

behalf are prepared and equipped for the complex, high tempo

battlefields that – of the future.

I often hear that AI is our future, and I don't disagree with that,

but AI also needs to be our present. As an implementation organization,

the JAIC will continue to work hard with many partners across the

department to bring that into being.

So let me just talk a little bit about our priorities in the JAIC today, and you can ask questions.

In JAIC 1.0, we helped jumpstart AI in the DOD through pathfinder

projects we called mission initiatives. So over the last year, year and

a half, we've been in that business. We developed over 30 AI products

working across a range of department use cases. We learned a great deal

and brought a – on board some of the brightest talent in the business.

It really is amazing.

When we took stock, however, we realized that this was not

transformational enough. We weren't going to be in a position to

transform the department through the delivery of use cases.

In JAIC 2.0, what we're calling our -- our effort now, we seek to

push harder across the department to accelerate the adoption of AI

across every aspect of our warfighting and business operations. While

the JAIC will continue to – to develop AI solutions, we're working in

parallel to enable a broad range of customers across the department. We

can't achieve scale without having a broader range of our – of

participants in the integration of AI. That means a renewed focus on

the Joint Common Foundation (JCF), which most of you are familiar with,

the DevSecOps platform that – and the key enabler for AI advancement

within the department. It's a resource for all, but especially for

disadvantaged users who don't have the infrastructure and the tech

expertise to do it themselves.

We're – we're recrafting our engagement mechanism inside the JAIC to

actively seek out problems and help make others successful. We will be

more problem pull than product push.

One thing we note is that stovepipes don't scale, so we will work

through our partners in the AI Executive Steering Group and the -- and

the subcommittees of that group, to integrate and focus common

architectures, AI standards, data-sharing strategies, educational norms,

and best practice for AI implementation. We'll continue to work across

the department on AI ethics, AI policy, AI governance, and we'll do

that as a community.

We'll also continue to work with like-minded nations to enhance

security cooperation and interoperability through our AI partnership for

the – for defense. All of the JAIC’s work comes back to that enabling,

that broad transformation across the department. We want to help

defense leaders see that AI is about generating essential warfighting

advantages. AI is not IT (information technology). It's not a black

box that a contractor's going to deliver to you. It's not some digital

gadget that an IT rep will show you how to log into.

Our primary implementation challenge is the hard work of decision

engineering. It's commanders' business at every level and in every

defense enterprise. How do you make warfighting decisions? What data

drives your decision-making? Do you have that data? Do you have access

to it? If -- it's -- it's driving leaders to think, "You know, I could

make a better decision if I knew 'X'."

JAIC wants to help leaders at every level get to that "X". We want

data-informed, data-driven decisions across warfighting and

functional enterprises. We want to understand the enemy and ourselves,

and benefit from data-driven insight into what's -- what happens next.

We want the generation of tempo to respond to fast-moving threats across

multiple domains. We want recursive virtualized war-gaming and

simulation at great fidelity. We want successful teaming among manned

and unmanned platforms, and we want small leaders – or small unit

leaders that go into harm's way to go with a more complete understanding

of their threats, their risks, their resources, and their

opportunities.

We're grateful to Congress. We'll – we're grateful to DOD

leadership, the enthusiastic service members who – who are helping us

with this, and the American people for their continued trust and

support.

I really appreciate your attention and look forward to your questions. Thank you very much.

STAFF: Thank you, sir. Appreciate that. We'll go up to the phones

now. The first question is going to come from Sydney Freedberg from

Breaking Defense. Go ahead, Sydney.

Q: Hello, General. Sydney Freedberg here from Breaking Defense.

Thank you for doing it. And apologies if we ask you to repeat yourself a

little bit because those of us on the phone line were not dialed in

until you'd started speaking.

You know, you have talked repeatedly about the importance of this

being commanders' -- AI being commanders' business, about the importance

of this not being seen as, you know, nerd stuff. How – how have you

actually socialized, institutionalized that across the Defense

Department? I mean, clearly, there's a lot of high-level interest from,

you know, service chiefs in AI. There's quite a lot of lip service, at

least, to AI and people in the briefing slides. But how do you really

familiarize for, not the technical people, but the commanders with the

potential of this? You know, once we added the JAIC, we're – we're from

a fairly limited number of people. You don't have – you can't send a

missionary out to every office, you know, in the Pentagon to preach the

virtues of AI.

GEN. GROEN: Yeah, great – great question, Sydney. And – and so this

– this really is the heart of the implementation challenge. And so

getting commanders, senior leaders across the department to really

understand that this is not IT. AI is not IT. This is warfighting

business. It is assessment and analysis – analysis of warfighting

decision-making or enterprise decision-making in our support

infrastructure and in our business infrastructure.

If you – if you understand it that way, then – then we open the doors

to – to much better and much more effective integration into our

warfighting constructs, our service enterprises, our support enterprises

across the department, and we really start to – to get traction.

This is why our focus on – on the joint common foundation, because

what we find – I – I think there are two aspects that I think are

important: the joint common foundation, which provides a technical

platform. So now we have a technical platform. It'll – it'll become

IOC (initial operating capability) here early in – in 2021, and then we

will – we will – we will rapidly change it. We expect to do monthly

updates of tools and capabilities to that platform.

But that platform now provides a technical basis for especially

disadvantaged users who don't have access to data scientists, who don't

have access to algorithms, who are not sure how to leverage their data.

We can bring those – those folks to a place where now they can store

their data. They might be able to leverage training data from some

other program. We might be able to identify algorithms that can be

repurposed and reused, you know, in similar problem sets. So there's

that technical piece of it.

There's also the soft, what I call the soft services side of it,

which is now we help them with AI testing and evaluation for

verification and validation, those critical AI functions, and we help

them with best practice in that regard. We help them with AI ethics and

how to build an ethically-grounded AI development program. And then we

create an environment for, for sharing of, of all of that through best

practice.

If we – if we do that, then we will, in addition to the platform

piece of this, we're building our – we're – what we call our missions

directorate now. We are re-crafting that to be much more aggressive in –

in going out to find those problems, find those most compelling use

cases across the department that then we can bring back home and help

that user understand the problem, help that user get access to

contracting vehicles, help that user access to technical platform and do

everything we can to facilitate AI – a thousand AI sprouts across the

department so that it really starts to take hold and we start to see the

impact on decision-making.

STAFF: Thanks, sir. The next question is coming from Khari Johnson

of VentureBeat. Khari, if you're still on the line, go ahead, sir.

He's not on the line, so we're going to go the next question, which

is from Jasmine from National Defense. Jasmine, if you're still on the

line go ahead.

Q: Thank you, sir.

I do know defense companies faced a volley of attacks from

adversarial nations attempting to steal their IP (intellectual property)

and get peeks at sensitive information. How is the JAIC keeping the

important work it does with industry, safe from these countries or bad

actors who may want to steal and replicate it?

GEN. GROEN: Yeah, great question, Jasmine.

And, you know, we're reminded every day that the Artificial

Intelligence space is a competitive space and there's a lot of places

that we compete. I probably, the first thing I would throw out there is

cybersecurity and you know obviously we participate along with the rest

of the department in our cybersecurity initiative here in the

department; to defend our networks, to defend our cloud architecture, to

defend our algorithms.

But in addition to that we have developed a number of cybersecurity

tools that we can help that industry detect those threats. And then the

third thing I'd throw on there is our efforts now to secure our

platform, so obviously we'll use defense-certified accessibility

requirements. What we're focused on is building a trusted ecosystem.

Because one of the things that will make this powerful is our ability

to share. So we have to be able to ascertain our data. We have to know

its provenance. We have to know that the networks that we pass that

data on are sound and secure. We have to create an environment where we

can readily move through, you know, containerization or some other

method; developments or codes that's done in one platform to another

platform.

So to do all of this securely and safely is a primary demand signal

on the joint common foundation and it is on all of our AI developments

across the department, in the platforms, the other platforms that are

out there across the department. We are wide awake to the threat posed

by foreign actors especially who have a proven track record of stealing

intellectual property from wherever they can get their hands on it;

we're going to try to provide an effective defense to ensure that

doesn't happen.

STAFF: Okay, the next question is going to go out to Brandi Vincent from NextGov. Go ahead, Ma'am.

Q: Hi. Thank you so much for the call today.

My question is on the Joint Common Foundation. You mentioned these

soft services that it'll have and I read recently that there will be

some, to keep users aware of ethical principles and other important

considerations they should make when using AI in warfare.

Can you tell us a little bit more about how the platform will be

fused with the Pentagon's ethical priorities? And from your own

experience, why do you believe that that's important?

GEN. GROEN: Yeah, great question.

And I really, I think this is so important, and I tell you, I didn't

always think that way. When I came into the JAIC job I had my own

epiphany about the role of an AI ethical foundation to everything that

we do and it just jumped right out at you. Many people might think

well, yeah, of course, you know we do things ethically so when we use AI

we'll do them ethically as well.

But I think of it through the lens of, just like the law of war; the

law of war, you know, the determination of military necessity, the

unnec— limiting unnecessary suffering; all of the principles of the law

of war that drive our decision-making actually has a significant impact

on the way that we organize and fight our force today and you can see

it; anybody, you know – the fact that we have a very mature targeting

doctrine and a targeting process that is full of checks and balances

helps us to ensure that we are complying with the law of war.

This process is unprecedented and it is thoroughly ingrained in the

way we do things. It changes the way we do business in the targeting

world. We believe that there's a similar approach for AI and ethical

considerations. So when you think about the AI principles or its

ethical principles, these things tell us how to build AI and then how to

employ them responsibly.

So when we think about building AI we want to make sure that our

outcomes are traceable. We want to make sure that it's equitable. We

want to make sure that our systems are reliable and we do that through

test and evaluation in a very rigorous way. But then we also want to

ensure that as we employ our AI that we're doing it in ways that are

responsible and that are governable. So we know that we're using an AI

within the boundaries in which it was tested for example. Or we use an

AI in a manner that we can turn it off or we can ask it in some cases,

hey, how sure are you about that answer? What is your assessment of the

quality of the answer you provide? And AI gives us the window to be

able to do that.

Honestly, we and the nations that we're working with in our AI

partnership for defense really are kind of breaking ground here for

establishing that ethical foundation and it will be just as important

and just as impactful as application of the law of war is on our

targeting doctrine, for example. So if you have that it's really

critical then. There are not that many experts, ethicists who really

understand this topic and can communicate it in a way that helps

designers design systems, help testers test systems, and help

implementers implement them.

And so we have some of them in the JAIC; they're fantastic people and

they punch way above their weight. We're really helping – hoping

they'd give access to their expertise across the department by linking

it to the Joint Common Foundation. Thanks for the question. I think

that's a really important one.

STAFF: So the next question goes out to Jackson Barnett of FedScoop. Jackson, go ahead, sir.

Q: Hi. Thank you so much for doing this.

Could you say, what is your expectation or even baseline requirement

for what everyone needs to understand about AI; when you talk about

trying to enable AI across the department, what is it that you hope that

those, be they commanders out in the field or people working in the

back-office parts of the Pentagon, what do people need to know about AI

for your vision of enabling AI across the department to work?

GEN. GROEN: Yeah, great question, Jackson.

So the most important thing I think is what I alluded to in my

opening comments; that AI is about decision-making. Not decision-making

in the abstract but decision-making in the finite, in the moment, in

the decision with the decision-maker, that really defines like how do I

want to make that decision? What process do I use today? And then what

data do I use to make that decision today?

In many cases, historically, a lot of our war-fighting decisions are

made kind of by seat-of-the-pants judgment, individuals with lots of

experience, mature understanding of the situation, but doing

decision-making without necessarily current data. We can fix that; we

can make that better and so ways for us to do that, you know we have to

help people visualize what AI means across the department and what an AI

use case looks like.

It's really easy for me to start at the tactical level. You know, we

– we want weapons that are more precise, we want weapons that guide on

command, you know, to human-selected targets, we want threat detection –

automatic threat detection and threat identification on our bases, we

want better information about the logistic support that is available to

our small units, we would like better awareness of the medical situation

– you know, perhaps remote triage, medical dispatch, processes – you

know, everything that you just imagine that you do in a – in a

commercial environment today here in the United States, we want to be

able to do those same things with the same ease and the same reliability

on the battlefield – you know, reconnaissance and scouting for – you

know, with unmanned platforms, you know, equipment that's instrumented,

that's going to tell us if it – if it thinks it will fail in the next –

you know, in the next hour or the next flight or whatever – team members

that – that have secure communications over small distances.

You know, that – all of that tech exists today and if you move up the

value chain, you know, up into the, you know, like, theater, like

combatant command decision support, you know, visibility of data across –

across the theater, what an incredible thing that would be to achieve,

available at the fingertips of a combatant commander at any time.

Today, those combatant commanders, really on the – alone and

unafraid, in many cases – during – in the geographical regions around

the world, have to make real time decisions based on imperfect

knowledge, and – and – and so they – they do the best – they can but I

think our combatant commanders deserve better than that. They should be

able to decide based on data where we have data available and where we

can make that data – data available for them – things, like, at a

service level, you know, a human capital management, you know, think

"Moneyball," right? Like I need that kind of person for this job, I'm

looking for an individual with this kind of skills. Where can I find

such a person, when is that person going to rotate?

The services that we can provide service members – you know, I – I – I

don't know how many -- how many man hours I've spent standing in lines

in an administration section, you know, in my command, you know, waiting

for somebody to look at my record book or a change in a -- you know, an

allowance or something like that. Why – why do we do that? You know, I

haven't set a foot in a bank for years. Why would I have to set foot

into an admin's section to be able to do these kinds of processes?

This is kind – you know, this is the broad visualization that

includes, you know, support and enabling capabilities but it extends all

the way to warfighting decision making that – you know, that – it’s

necessary, right? It's – we have to – we have to do this. It will make

us more effective, more efficient.

STAFF: Thank you, sir. The next question comes from Lauren Williams from FCW. Lauren, if you're on the line, go ahead, Ma'am.

Q: Yes, thank you for – for doing this, sir.

As you're talking about these new capabilities, the – the data

strategy came out and obviously, like, data is a very important part of

making AI work. Can you talk a little bit about what the JAIC is going

to be doing in the near future, like when we can expect to see, you

know, in terms of implementing the data strategy and what the JAIC's

role is going to be there?

GEN. GROEN: Great – great question, Laura.

So the – so the data strategy, you know, for – for those of you who –

who don't know, that's – comes from the Chief Data Officer (CDO), so

within – within the – the Chief Information Officer suite.

And so what the – what the CDO organization has done is – is – has

kind of created a – a – a vision and a – and a strategy for how are we

going to manage the enormous amount of data that's going to be flowing

through our networks, that's going to be coming from our sensors, that's

going to be generated and curated for AI models and everywhere else we

use data?

You can't be data-driven as a department, you can't do data-driven

warfighting if you don't have a strategy for how to manage your data.

And so through – as – as we established the – the Joint Common

Foundation but also as we help other customers, you know, execute AI

programs within – you know, within their enterprises, we will help the –

the CDO implement that strategy, right? So things like data sharing.

So data sharing is really important. In an environment where we have

enormous amounts of data available to us broadly across the department,

we need to make sure that data is available from one consumer to

another consumer, and -- and hand in hand with that is the security of

that data. We need to make sure that we have the right security

controls on the data, that data is shared but it's shared within a

construct that we can protect the data.

One of the worst things that we could do is create stovepipes of data

that are not accessible across the department and that – that result in

the department spending millions and millions of dollars, you know,

re-analyzing data, re-cleaning data, you know, re-purposing data when –

when that data is already available.

So we're working with the CDO and then we'll work across the – the AI

executive steering group to figure out ways, how do we – how do we not

only share models but how do we share code, how do we share training

data, how do we share test and evaluation data? These are the kind of

things that a data strategy will help us kind of, you know, put the

lines in the road so we can do it effectively but do it safely at the

same time.

STAFF: Thank you, sir.

We've got two other journalists on the line and I want to try to get

to that before we've got to cut off. So the next question is going to

go to Scott from Federal News Network. Scott, if you're on the line, go

ahead, sir.

Q: Hi, General. Thanks for doing this.

You know, just curious about your priorities for 2021. You know,

you're getting more money than you were a couple of years ago,

considering that your – your organization is growing. You've started to

work within some of the combat areas. So, you know, where are you

going to be investing money and where are we going to see the JAIC start

to grow?

GEN. GROEN: Great question, Scott.

So – so what – as we look at – you know, one of the challenges of

kind of where we are in this evolution of the JAIC and the department is

we – we have a – a – a pipeline of use cases that is way more, you know

– vastly exceeds our resources.

And so this is part of our enablement process. We want to – you

know, we want to find the most compelling use cases that we can find,

the things that are most transformational, the things that will have the

broadest application and the things that will lead to, you know,

innovation in this space.

And so there's a balance here that we are – that we're trying to

achieve. On the one hand, we're working some very cutting-edge AI

technologies with consumers and – some pretty mature consumers –

consumers who are, you know, a – you know, working at – at the same

level we are and in partnership. On the other side of the coin, we have

– we have partnerships with really important enterprises and

organizations who haven't even really started their journey into AI.

And so we've got to make sure that we have the right balance of

investment in high tech AI that moves the state of the art and shows the

pathway for additional AI development and implementation, and then also

helping consumers, you know, with their first forays into the AI

environment, and that – and that includes things like, you know, doing

data readiness assessments.

So as I mentioned in my opening remarks, when – you know, we're

recrafting our missions directorate to – to – you know, we're creating

flyaway teams, if you will, that can – that can fall in on a – a – an

enterprise or a – or a potential AI consumer and help them understand

their data environment, help them understand what kind of things that

they're going to have to do to create an environment that – that can

support an artificial intelligence set of solutions. So we'll help them

with that. And when we're done helping them with that, then we'll help

find them the AI solution.

In an unlimited budgetary environment, we might build that algorithm

for them. In a limited budget environment, sometimes the best things we

can do is look – link them to a contractor who may have a demonstrated

expertise in their particular – particular use case. In some cases, it

may just be helping them find a contract vehicle so that they can bring

somebody in. In any case, we'll inform them with the ethical

standards. We'll inform them with best practices for test and

evaluation. We'll help them do their data analysis.

And so our resourcing now is spread between high-end use cases and

use cases that we're – that we're building, because we – you know,

because purposefully, we want to build those to – to meet specific

needs. The common foundation and building that common foundation, and

then helping a broader base of consumers take AI on board and start to,

you know, start to respond to the transformation by looking at their own

problem sets facilitated by us. So we'll have to – we’ll have to – you

know, it's a very, it's a very nuanced program of, how do you spread

the resourcing to make sure all of those important functions are

accomplished? Thanks for the question.

STAFF: Thank you, sir.

This will be the last question. This question comes from Peter from

ACIA TV. And we just have a couple of more minutes here, so Peter, if

you could go ahead with your question, sir.

Q: Absolutely. Thank you very much.

I wanted to ask about the security of algorithms, and how you attempt

to deal with – one of the biggest problems in AI is the way it's

over-matching to the data, in that you will have to keep algorithms

secure, and so periodically, update and reduce – and – and renew them.

What fear do you see in over-matching, or even under-matching if you

know that you have to throw out a bunch of data?

GEN. GROEN: Yeah, that's – that’s a great question.

Primarily, you know, what – you know, we – we are, we are limited in

the data we have, in many cases, and the good data, the good, labeled

data, the good – you know, the well-conditioned data. And so helping us

– us kind of creating the standards and the environment so we can build

high-quality data is – is an important step that we'll accomplish

through the JCF, and we'll help other consumers to – in that same – in

the same role.

But then -- but then once we have good data, we have to protect it.

So we protect it through the – you know, we – we – we had the security

conversation a little while ago, but we protect it through the right

security apparatus so that we – we can share effectively, yet ensure

that that data remains protected. You know, we have to protect test

and evaluation data. We have to protect labeled and conditioned data for a

lot of different reasons – for operational reasons, for technical

reasons, and because it's a valuable resource. We have to protect the

intellectual property of government data, and how we use that

effectively to – to ensure that we have access to rapid and – and

frequent algorithm updates, yet without paying a proprietary price for

data that the government doesn't own or the data the government gave

away. We want to make sure that we have a – a – an environment that

makes sense for that – for that – for that situation.

I – what your question kind of reminds all of us, though, is, you

know, the – the – the technology of adversarial AI, the opportunities

for AI exploitation or spoofing or deception, you know, that – that

research environment is very robust. Obviously, we pay very close

attention. We do have a – a – a pretty significant powerhouse bench of

AI engineers and experts who – in – in data science, as well, who – who

are – who keep us up-to-date and keep us abreast of all of the

developments in those threatening aspects of artificial intelligence,

and we work those into our processes to the – to the degree we can. We

are very sensitive to the idea of over-conditioned or overmatched data.

We are very sensitive to the issues of AI vulnerability and a – and

adversarial AI, and we're – and we're trying to build and work in, how

do we build robust algorithms?

In many cases, the science of responding to adversarial AI and the

threat that it poses is a very immature science, and so from an

implementation perspective, we find ourselves, you know, working with

our – especially our academic partners and our – our industry partners

to really help us understand where we need to go as a department to make

sure that our AI algorithms are safe, are protected and our data is –

is – is the same: safe, protected, and usable when we need to use it.

All of these are – are – are artifacts of AI implementation, but the

department is learning as we go, and the JAIC is trying to -- to kind of

show the way and get the conversation going across the department so we

don't have to discover it, you know, serially. We can discover in

parallel with all of us kind of learning together.

So we'll – you know, we'll – we'll keep pushing that, but your – your

point is very well-taken, and it's an important consideration for us,

is making sure that we have reliability in the – in the outcomes of all

of our artificial intelligence efforts.

STAFF: All right. Thank you, ladies and gentlemen. Thank you, General Groen, for your time today.

Just as a reminder for the -- the folks out on the line, this broadcast

will be replayed on DVIDS (Defense Visual Information Distribution

Service), and we should have a transcript up on defense.gov within the

next 24 hours. If you have any follow-on questions, you can reach out

to me at my contacts. Most of you have those, or you can contact the

OSD(PA) (Office of the Secretary of Defense – Public Affairs) duty officers.

Folks, thank you very much for everybody for attending today.